You've prepared your script, warmed up your voice-over artist, set up your mic, and launched Audacity. Now you can record your narration.

Typically, audio narration is recorded long-form: you record a large segment, perhaps 10 minutes or so, in one take. If you make an error or mispronounce a word, I recommend that you simply go back to the start of the sentence or phrase and start again. There is no need to stop recording. Make an audio note for yourself by saying something like “I’m going to take that again” before repeating the phrase and carrying on with the narration.

It's as well to point out now that everyone fluffs their lines: get used to it. Only the very best professional voice-over artists get everything right in one long take, and even they don't get it right first time, every time. In my view, your audio content will benefit more from the narrator getting the four Ps (pace, pitch, projection, and pausing) right than from attempting to record a piece in one go.

Once your recording is complete, save the file. This is your source, or master, file. I usually add the suffix “_master” to its name, as in myAudio_master.wav.

Now import your master file into your editor and re-save it as myAudio_edit.wav. This is the working version of the file, where you will apply digital signal processing and make your edits, leaving the master untouched.
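If you'd rather create the working file outside the editor, the naming convention described above is easy to script. The sketch below is a hypothetical helper (`make_working_copy` is my own name, not part of Audacity); it assumes your master file follows the `name_master.wav` pattern and makes a byte-for-byte copy, so the master itself is never modified.

```python
import shutil
from pathlib import Path


def make_working_copy(master: Path) -> Path:
    """Copy e.g. myAudio_master.wav to myAudio_edit.wav in the same folder."""
    stem = master.stem
    # Drop the "_master" suffix so the result is "myAudio_edit", not "myAudio_master_edit".
    if stem.endswith("_master"):
        stem = stem[: -len("_master")]
    edit = master.with_name(f"{stem}_edit{master.suffix}")
    # Refuse to overwrite a working file that may already contain edits.
    if edit.exists():
        raise FileExistsError(edit)
    shutil.copyfile(master, edit)
    return edit
```

Calling `make_working_copy(Path("myAudio_master.wav"))` would return `Path("myAudio_edit.wav")`. Refusing to overwrite is a deliberate choice: an accidental second run should not wipe out an edit session.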

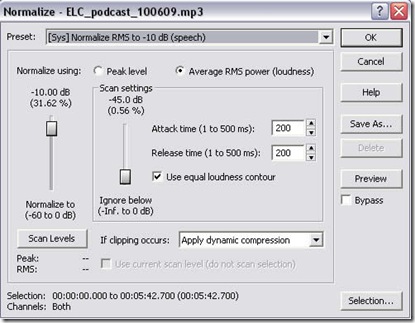

First of all, the file is normalized (see Figure 1). Normalization is the process of increasing (or decreasing) the amplitude (often erroneously called "volume") of an entire audio signal so that the peak amplitude matches a desired target. Typically, normalization increases the amplitude of the audio waveform to the maximum level that does not introduce any new distortion.

Figure 1. Normalization dialog box with process optimized for speech (-10dB)

In the normalization process, a constant amount of gain (increase in signal amplitude) is applied to the selected region of the recording to bring the highest peak to a target level, usually just below the 0 dB maximum, for example -0.3 dB (about 96.6% of full scale). Normalization differs from dynamic range compression, which applies varying amounts of gain over a recording to keep the level within a minimum and maximum range. Because normalization applies the same gain across the whole selected region, the relative dynamics (and the signal-to-noise ratio) are preserved.
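The arithmetic behind these figures is short. Levels in dB relative to full scale (where 0 dB is the largest representable amplitude) convert to a linear amplitude ratio with the standard 20·log₁₀ relationship, and the gain g is simply the factor that moves the measured peak to the target. In the notation below (my own), A_signal and A_noise stand for the RMS amplitudes of the speech and of the background noise:

$$
A_{\text{target}} = 10^{L_{\text{target}}/20}, \qquad
10^{-0.3/20} \approx 0.966, \qquad
20\log_{10}(0.98) \approx -0.18\ \text{dB}
$$

$$
g = \frac{A_{\text{target}}}{A_{\text{peak}}}, \qquad
\mathrm{SNR}_{\text{after}} = 20\log_{10}\frac{g\,A_{\text{signal}}}{g\,A_{\text{noise}}} = 20\log_{10}\frac{A_{\text{signal}}}{A_{\text{noise}}} = \mathrm{SNR}_{\text{before}}
$$

So a -0.3 dB target is roughly 96.6% of full scale (98% would be about -0.18 dB), and because the same g multiplies both speech and noise, it cancels out of the signal-to-noise ratio.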

The normalization process usually requires two passes through the audio clip: the first pass determines the highest peak, and the second pass applies the gain to the entire recording.
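Here is a minimal sketch of that two-pass process. It assumes the audio is already held as a list of floating-point samples between -1.0 and 1.0 (reading and writing WAV files is left out), and `normalize_peak` is a hypothetical name for illustration, not Audacity's own code.

```python
def db_to_linear(level_db: float) -> float:
    """Convert a level in dB relative to full scale to a linear amplitude (0 dB == 1.0)."""
    return 10 ** (level_db / 20)


def normalize_peak(samples: list[float], target_db: float = -0.3) -> list[float]:
    """Scale every sample by one constant gain so the highest peak lands on target_db."""
    # Pass 1: find the highest absolute peak in the selection.
    peak = max((abs(s) for s in samples), default=0.0)
    if peak == 0.0:
        return list(samples)  # pure silence: there is nothing to scale
    gain = db_to_linear(target_db) / peak
    # Pass 2: apply the same gain to every sample, preserving relative dynamics.
    return [s * gain for s in samples]


quiet_take = [0.05, -0.20, 0.10, -0.02]
louder_take = normalize_peak(quiet_take, target_db=-0.3)
print(max(abs(s) for s in louder_take))  # about 0.966
```

Passing `target_db=-10.0` instead would correspond to the -10 dB value in Figure 1's caption. If the take is already louder than the target, the computed gain is below 1.0 and the level goes down, which is the "decreasing" case mentioned earlier.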

Next, Noise Reduction is applied (see Figure 2). Noise reduction (NR) is the process of removing unwanted noise from an audio signal. All recording devices, whether analog or digital, have traits that make them susceptible to noise. Noise can be ambient, generated in the recording environment (for example, by a running air conditioner); random (white noise); or coherent (introduced by the recording device's electronic or mechanical components).

Figure 2. NR dialog box

In electronic recording devices, a major form of noise is hiss, which is caused by the random thermal motion of electrons in the circuit's components: the hotter the components, the stronger the motion. These random fluctuations add to the voltage of the output signal, creating audible noise. Laptop-based recording is particularly susceptible to this form of noise because heat sources such as the CPU and heat sink sit close to the machine's soundcard.
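Because thermal hiss is random and spread across all frequencies, it is commonly modelled as white Gaussian noise. The sketch below simulates that assumption: it generates hiss with a standard deviation of 0.001 (an arbitrary illustrative value) alongside a 440 Hz test tone, then reports the noise floor in dB relative to full scale and the signal-to-noise ratio, both from RMS levels.

```python
import math
import random


def rms(samples: list[float]) -> float:
    """Root-mean-square level of a block of samples."""
    return math.sqrt(sum(s * s for s in samples) / len(samples))


def to_dbfs(amplitude: float) -> float:
    """Linear amplitude to dB relative to full scale (1.0)."""
    return 20 * math.log10(amplitude)


def snr_db(signal: list[float], noise: list[float]) -> float:
    """Signal-to-noise ratio in dB, computed from RMS amplitudes."""
    return 20 * math.log10(rms(signal) / rms(noise))


RATE = 44_100            # samples per second (assumed CD-quality rate)
rng = random.Random(42)  # fixed seed so the run is repeatable
tone = [0.5 * math.sin(2 * math.pi * 440 * n / RATE) for n in range(RATE)]
hiss = [rng.gauss(0.0, 0.001) for _ in range(RATE)]

print(f"noise floor: {to_dbfs(rms(hiss)):.1f} dBFS")  # roughly -60 dBFS
print(f"SNR: {snr_db(tone, hiss):.1f} dB")            # roughly 51 dB
```

Warmer components would show up here as a larger standard deviation for the hiss, which raises the noise floor and lowers the SNR by the same number of dB.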

In the analog world, magnetic tape noise is introduced by the grain structure of the tape medium itself, as well as by crossover noise from moving parts in tape motors.
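Audacity's Noise Reduction effect works in the frequency domain from a noise profile you capture, which is too involved to reproduce here. As a much simpler illustration of the same goal, the sketch below implements a basic noise gate, which is a different technique: it measures the RMS level of a noise-only stretch (for example, a second or two of room tone), sets a threshold a few dB above it, and silences any block of the recording that stays below that threshold. The function name, the 512-sample block size, and the 6 dB margin are all assumptions for illustration.

```python
import math


def rms(samples: list[float]) -> float:
    """Root-mean-square level; 0.0 for an empty block."""
    return math.sqrt(sum(s * s for s in samples) / len(samples)) if samples else 0.0


def noise_gate(samples: list[float], noise_sample: list[float],
               block_size: int = 512, margin_db: float = 6.0) -> list[float]:
    """Zero out blocks whose RMS level is not clearly above the measured noise floor."""
    # Threshold = noise floor raised by a safety margin (converted from dB to a ratio).
    threshold = rms(noise_sample) * 10 ** (margin_db / 20)
    gated: list[float] = []
    for start in range(0, len(samples), block_size):
        block = samples[start:start + block_size]
        if rms(block) > threshold:
            gated.extend(block)               # speech present: keep the block as-is
        else:
            gated.extend([0.0] * len(block))  # noise only: silence the block
    return gated
```

Hard on/off switching like this can click and clip word endings, which is why practical gates add attack and release times. It also does nothing about noise underneath the speech itself, which is what spectral tools such as Noise Reduction are designed to tackle.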

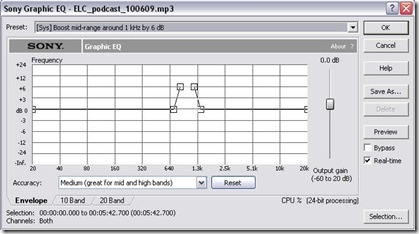

Finally, the audio is equalized (see Figure 3). Equalization (EQ) is the process of using digital algorithms or electronic elements to alter the frequency response characteristics of a sound signal.

Figure 3. Full-range Graphic Equalizer

At the most basic level, many consumer playback devices have 'Bass' and 'Treble' tone controls to boost or cut the bottom- and top-end frequencies of a recording. Digital audio editors have much more sophisticated equalization capabilities, such as the full-range graphic equalizer shown in Figure 3.
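A 'Bass' control can be modelled as a low-shelf filter: it raises or lowers everything below a corner frequency and leaves the highs alone. The sketch below computes low-shelf biquad coefficients using the formulas from Robert Bristow-Johnson's widely used Audio EQ Cookbook (with shelf slope S = 1) and runs the filter sample by sample. It illustrates the principle only and is not presented as how Audacity's equalizer effects are implemented; the function names, the 200 Hz corner, and the +6 dB boost are my own illustrative choices.

```python
import math


def low_shelf_coeffs(rate: float, f0: float, gain_db: float) -> tuple[list[float], list[float]]:
    """Low-shelf biquad (Audio EQ Cookbook, shelf slope S = 1), normalized so a0 == 1."""
    a = 10 ** (gain_db / 40)                 # square root of the linear shelf gain
    w0 = 2 * math.pi * f0 / rate
    cos_w0 = math.cos(w0)
    alpha = math.sin(w0) / 2 * math.sqrt(2)  # with S = 1 the slope term reduces to 2
    k = 2 * math.sqrt(a) * alpha
    b0 = a * ((a + 1) - (a - 1) * cos_w0 + k)
    b1 = 2 * a * ((a - 1) - (a + 1) * cos_w0)
    b2 = a * ((a + 1) - (a - 1) * cos_w0 - k)
    a0 = (a + 1) + (a - 1) * cos_w0 + k
    a1 = -2 * ((a - 1) + (a + 1) * cos_w0)
    a2 = (a + 1) + (a - 1) * cos_w0 - k
    return [b0 / a0, b1 / a0, b2 / a0], [1.0, a1 / a0, a2 / a0]


def biquad(samples: list[float], b: list[float], a: list[float]) -> list[float]:
    """Direct Form I: y[n] = b0 x[n] + b1 x[n-1] + b2 x[n-2] - a1 y[n-1] - a2 y[n-2]."""
    x1 = x2 = y1 = y2 = 0.0
    out = []
    for x0 in samples:
        y0 = b[0] * x0 + b[1] * x1 + b[2] * x2 - a[1] * y1 - a[2] * y2
        out.append(y0)
        x2, x1 = x1, x0
        y2, y1 = y1, y0
    return out


b, a = low_shelf_coeffs(rate=44_100, f0=200, gain_db=6.0)
low = biquad([0.1] * 5000, b, a)  # constant (0 Hz) input: fully in the bass shelf
nyquist = [0.1 if n % 2 == 0 else -0.1 for n in range(5000)]
high = biquad(nyquist, b, a)      # highest representable frequency: above the shelf
print(round(low[-1], 3), round(abs(high[-1]), 3))  # bass roughly doubled, top end unchanged
```

A 'Treble' control is the mirror image, a high-shelf filter, for which the same cookbook gives companion formulas. A graphic equalizer like the one in Figure 3 can be thought of as many such frequency bands side by side, each with its own gain.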

Once you have undertaken these pre-editing processes, save your working (or edit) file.
