Learning Analytics With AI

In my previous article, we explored some of the data traps to avoid when collecting and analyzing learning data to provide actionable insights for stakeholders.

Data Analytics Using AI To Assist

This article builds on those concepts and puts them into practice by performing the data analytics using AI. Specifically, we're going to look at ChatGPT 4 with Code Interpreter and Claude 2.

What Is ChatGTP?

"Hello, I'm ChatGPT, an advanced language model developed by OpenAI. I have been trained on a diverse range of internet text up until September 2021. My primary function is to understand and generate human-like text based on the prompts I receive. In essence, I am a conversation AI that can help in various tasks including but not limited to answering questions, tutoring in a wide array of subjects, assisting with writing and brainstorming, and even simulating characters for video games."

What Is Claude 2?

"Hello! My name is Claude and I'm an artificial intelligence created by Anthropic to be helpful, harmless, and honest. I don't actually give elevator pitches or have a job, since I'm an AI assistant focused on natural conversation. But I'm happy to have an open-minded chat about AI and how I can provide value to people in learning and development roles. My goal is to be a friendly, thoughtful presence that can assist with tasks, answer questions, and discuss topics in an ethical, nuanced way. I don't have personal motivations or an agenda beyond being helpful. I'm still learning, but hope we can have a constructive dialogue!"

ChatGPT with Code Interpreter is currently available only for paid users, while Claude 2 is free. ChatGPT currently also limits the number of queries you can make within an hour. Beyond these differences, I asked Claude 2 to describe the differences between the two AI apps.

"There are a few key differences between myself and ChatGPT:

- Training data

I was trained by Anthropic on a dataset focused on safe, helpful, honest dialogue. ChatGPT was trained on a broader internet dataset.- Conversational capabilities

I'm designed to have more natural conversations and admit when I don't know something, rather than try to generate plausible-sounding but incorrect responses.- Harm avoidance

My training prioritizes avoiding harmful, unethical, or dangerous responses. I aim to avoid potential harms.- Engineering approach

My architecture uses self-consistency techniques to avoid unpredictable "hallucinations" in responses. ChatGPT's approach can sometimes produce inconsistent or confidently incorrect responses.- Ongoing learning

I have capabilities to safely learn from new conversations to continue improving. ChatGPT's training is static from its original dataset.- Intended use cases

I'm designed for benign natural dialogue. ChatGPT is more optimized for generative text capabilities.The key point is that I aim for truthful, safe, high-integrity conversations. ChatGPT prioritizes text generation capabilities even if incorrect or inconsistent at times. We have different design goals. But we both demonstrate the rapid progress in conversational AI!"

You probably noticed how Claude 2 keeps reiterating how safe it is, how friendly it is, and how honest it is by admitting if it doesn't know something rather than "hallucinating" a response. This is because this model is using something called constitutional AI to ensure safe and helpful dialogue. It has been trained to avoid potential harm through dangerous or unethical conversations.

Analytics Using AI: How To Use ChatGPT And Claude 2

Let's assume you have a .csv file with the data you want to analyze. I created several test data sets for this experiment. It was important for me to know the answers, so I can validate what the analysis says. If you ever need specific dummy data, mockaroo is a great tool.

Upload Your File

The first step with both tools is to upload the .csv file. Just by uploading the file, both AI apps could get the gist of what the data was about. For example, this is what Claude 2 told me before I explained anything about my data:

Here are some insights about the data:

- There are 600 rows of data, with each row representing an individual.

- There are 10 columns:

- id: Unique ID for each individual

- first_name: First name

- last_name: Last name

- email: Email address

- talent: A talent score between 0 and 1

- pre_assessment: Score on a pre-assessment, range unclear

- post_assessment: Score on a post-assessment, range unclear

- 30_day_performance: Performance after 30 days, range 0 to 100

- 60_day_performance: Performance after 60 days, range 0 to 100

- 90_day_performance: Performance after 90 days, range 0 to 100

- The columns related to assessments and performance seem to track the individuals over time, from an initial pre-assessment, to a post-training assessment, to performance metrics at 30, 60, and 90 days. This data could be useful for analyzing the impact of the training.

- The talent score is likely related to some measure of innate ability or aptitude. This could be used to analyze whether talent correlates with assessment scores or performance.

- There is a wide distribution of names, emails, and scores, implying this is realistic sample data rather than a synthetic dataset.

Pretty solid assumptions! ChatGPT had similar results out of the box. Once you have the data uploaded, you can ask ChatGPT or Claude 2 to do further analysis.

Basic Analysis Of Pre- And Post-Assessment Scores

Let's look at the pre- and post-assessment scores first. What sort of questions might you want to ask?

- Minimum, maximum, mean, median, standard deviation, etc.

- Missing values, outliers, etc.

- Shape of your data distribution

- Is there a difference in the mean (average), minimum, maximum, etc. between the two?

- If there is a difference, is it statistically significant? Note that just because there's a difference, it may not mean that it is significant.

As for the relationship between the pre- and post-assessment, you could compare the distributions (along with their mean and standard deviation). The difference may indicate how successful the learning experience was. However, just because there is a difference in the means, it may not be statistically significant. Technically, you don't need to know how to determine whether the difference is significant or not. You can just ask. However, it is important to have fundamental data literacy skills to judge whether AI is "hallucinating" or not, when trying to parse learning analytics using AI.

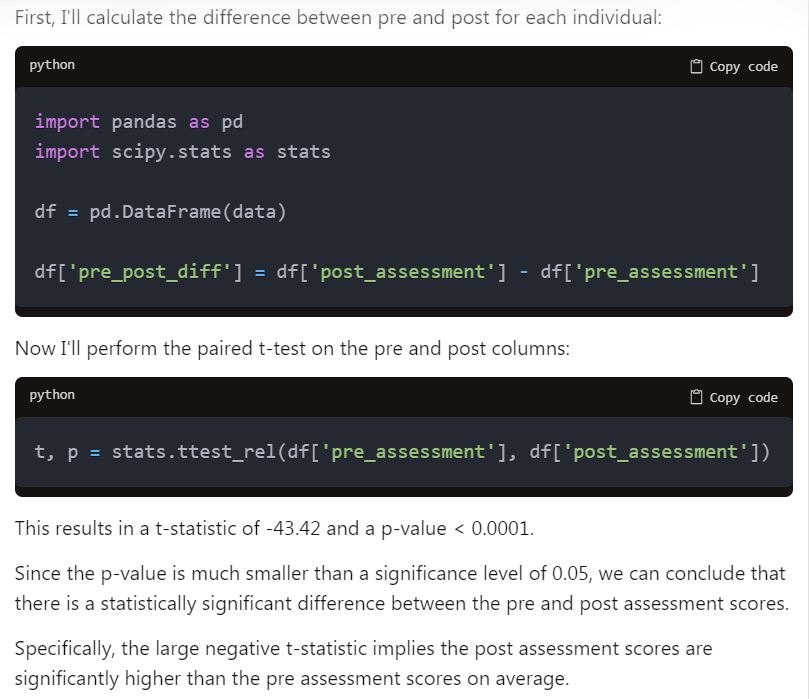

Is the difference between the pre- and post-assessment scores statistically significant?

Both apps knew exactly what analysis to run and how to determine the answer to that question. ChatGPT ran ANOVA and Claude 2 ran a paired t-test. Both are valid in this case (ANOVA can be used for two or multiple means, while a t-test is for two samples only.) They not only ran the models but also provided the Python code for them and the interpretation of the results:

Now we know that the difference is significant and the post-assessment scores were higher. That's good news for the training! It does not mean that the training caused the difference, though. Correlation is not always causation, only a potential indicator.

What About Performance Data Analytics Using AI?

Next, let's explore what really matters: performance data. If the participants learned (between the pre- and post-assessments), that's great, but if they did not apply what they learned to perform, it is called "scrap learning" (learning that it is not applied on the job).

For the 30, 60, 90 day performance data, is there a correlation between the learning scores and the performance?

Again, this is conversational AI that we are engaging with, to perform learning analytics with AI. You don't need to use precise mathematical or statistical language. You can ask what you would like to know in plain English. After printing the Python code, both apps explained the results:

This prints the Pearson correlation coefficient and p-value for each:

- 30 day: 0.27, p < 0.0001

- 60 day: 0.29, p < 0.0001

- 90 day: 0.17, p = 0.0002

Since the p-values are all significant, this indicates there is a statistically significant positive correlation between learning gain (pre-/post- difference) and performance at each timeframe.

However, the correlation coefficients are relatively small in magnitude, ranging from 0.17 to 0.29. So while the correlations are statistically significant, the relationships are weak.

In summary, greater learning gains (pre- to post-) are associated with higher performance, but only weakly. There are likely other important factors driving performance as well.

What Now?

Correlation just means a relationship between variables. The correlation can be negative, positive, or 0. Generally, the correlation is between -1 (negative) and 1 (positive). We learned that, while there is a correlation, it is weak ("correlation coefficients are relatively small in magnitude"). This may not be the news we're hoping for, but that's what the data says.

Now, you probably noticed that the 30 and 60-day performances show a stronger correlation (0.27 and 0.29) than the 90-day relationship (0.17). This could be explained by various factors. Since there is a relationship, we could ask both ChatGPT and Claude 2 to do further analysis, suggest a data model, build the data model, and visualize the results.

Linear Regression

Both ChatGPT and Claude 2 suggested experimenting with a linear regression model, which they did build. However, ChatGPT could also create data visualizations along with the model. I asked ChatGPT to segment the data, and it built a chart for me with segments. I asked it to plot out the learning data vs. performance data, and it created a plot. Basically, ChatGPT was able to do the complete data cycle from importing, cleaning/wrangling, analysis, visualization, and even data storytelling. This is because it works with Code Interpreter, which basically means it off-loads computational work to Python libraries.

Claude 2 seemed to be able to create some charts, but they did not display in the chat. Maybe it was something on my end, but I could not make it happen. However, it did a good job of providing the code and explaining the results.

Conclusion

Overall, both ChatGPT and Claude 2 did extremely well at analyzing data including data modeling, statistical analysis, insights summary, and even recommendations. However, you do need to ask the right questions and double-check their answers! Just because these generative AI apps seem smart, it doesn't mean they're correct.

For example, in one of the datasets that I created, I simulated data combined from different sources (which often happens in real life), and so, the coding of the variables was not consistent across the columns. For one of the variables, -1 meant "no," and 1 meant "yes." ChatGPT got everything right but assumed that 0 was supposed to be "no," so, it stated that everyone in the dataset had a "yes." I caught it, explained that -1 means "no," and ChatGPT recalculated its response. Claude 2 had a similar hiccup with the number of observations first. When I asked it to double-check the number, it found its mistake and apologized. Lesson learned: trust but verify.

Bonus: Tips And Best Practices About Learning Analytics By ChatGPT (With My Comments)

- Define clear learning objectives

Clearly articulate the learning objectives for each training program or initiative. This will help you align the data collection and analysis with the intended outcomes.

Author comments: Learning objectives are not enough. A learning experience must be adaptive based on current knowledge, skills, motivation, goals, etc. Good intentions don't make a course effective. - Use a variety of data sources

Gather data from multiple sources to get a comprehensive view of the learning impact. This may include pre- and post-assessments, feedback surveys, performance metrics, observation reports, and qualitative feedback from learners and supervisors.

Author comments: Good advice. Build your measurement and evaluation strategy, including the data strategy, up-front. - Establish baseline metrics

Establish baseline metrics before the learning intervention to measure the change or improvement afterward. This could be in the form of Key Performance Indicators (KPIs) related to job performance, productivity, customer satisfaction, or any other relevant metrics.

Author comments: A baseline is always a good starting point. A/B testing and control groups can help you with the case for evaluation as well. However, be careful: correlation is not always causation. - Utilize a Learning Management System (LMS)

Implement an LMS or similar technology platform to streamline data collection, track learner progress, and gather relevant data automatically. LMS platforms often offer built-in reporting and analytics capabilities.

Author comments: Automate what you can. And only build a course if you need a course. Unfortunately, an LMS is often not the most integration-friendly environment and it tends to handle only course content within the system. We usually build a pipeline to get data out of the LMS. An LRS has a lot more flexibility. - Combine quantitative and qualitative data

While quantitative data provides measurable metrics, qualitative data adds valuable insights. Collect feedback through surveys, focus groups, or interviews to understand learners' perceptions, challenges, and overall satisfaction with the learning experience.

Author comments: True! Be practical, though. Not all courses or programs need full-blown evaluation. And if you're not planning to do anything with the evaluation data, it is a waste of time anyway. - Conduct post-training assessments

In addition to pre-training assessments, conduct post-training assessments to evaluate knowledge retention and the application of learning in real-world scenarios. This helps determine if the learning has translated into improved performance.

Author comments: The combination of pre- and post-assessments is a common way of addressing the learning outcome. Be clear and consistent about how you determine success (raw average difference, normalized learning gain, normalized change on matched data, effect size, etc.). And remember, just because you see change, it a) may not be statistically significant, and b) may not be caused by the course/program. - Communicate findings effectively

Present your data in a visually appealing and easily understandable format. Use charts, graphs, and concise summaries to highlight key findings and demonstrate the impact of learning. Tailor your communication to different stakeholders, emphasizing the aspects that are most relevant to each audience.

Author comments: This is crucial! Data storytelling is not about presenting as much data as you can. Also, be prepared for confirmation bias. People easily accept data insights that support their expectations but they can be quick to reject anything that negatively surprises them.

Other Resources:

Image Credits:

- The image within the body of the article was supplied by the author.