Yesterday we worked on where we could improve or include different types of interactions in your e-learning course for maximum learning impact.

Sticking with interactions, today we’re going to zoom in and focus on the options and (equally importantly) the feedback in those interactions.

When it comes to writing questions and options (whether that’s in a simple multiple choice or in another activity such as a drag-and-drop) I like to remember the Goldilocks rule: they need to be not too hard, not too easy, but just right. It’s all about finding the right balance – so the learner is faced with a challenge, but not an impossible task.

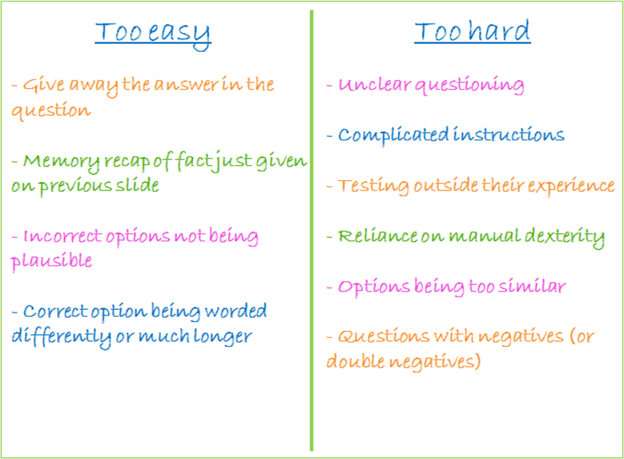

During the webinar, I asked the participants to brainstorm the kinds of things that can make an interaction either too easy or too hard. Here’s what they came up with.

I’m sure you can come up with many more examples of common mistakes that render a potentially good interaction next to useless. Unfortunately, even the selection above contains far too much to fix in the one day we have allocated, so let’s focus on the fixes that are quickest and easiest to make and that will have the biggest impact on the effectiveness of your interactions.

- Re-word questions so they don’t give away the answer

Alternatively, re-word the options so the correct one doesn’t simply repeat part of the question. I really hope your course doesn’t include examples of this, but these things do happen, so let’s get them out of the way right at the beginning. This one is straightforward: questions that effectively answer themselves are not worth including. Remember that this doesn’t just apply to quiz questions – you need to review the statements or options in drag-and-drops, matching pairs or any other ‘testing’ interaction, and re-word them as necessary to eliminate any overlap in wording or phrasing.

- Make all options equally plausible

We’ve all seen questions or activities that are laughably easy because some or most of the options simply aren’t plausible or because the right answer sticks out like a sore thumb. Some of the more glaring mistakes you will be able to pick up yourself, so take the time to work through your course and check for some of the common offenders: lazy ‘all of the above’ answers, extreme differences in length between correct and incorrect options, ridiculous options that seem to be there just to make up the numbers, and so on.

But I’d also recommend you get someone who doesn’t know the topic at all and doesn’t have the context of the surrounding content to take a look at your interactions. If they get all the answers right, that’s a pretty good sign that the options aren’t all equally plausible. It’s worth doing this for your knowledge test as well, if you have one – if someone who is new to the subject can pass the test without taking the course, you need to re-write your test!

So now you’re probably in a situation where you know which interactions need a bit of work in terms of the plausibility of options. This is the tough bit, I know – if there were implausible options, it’s probably because coming up with plausible alternatives wasn’t easy. My suggestion is to ask your external helper to stick around a little longer and give you a hand with this part too. It might be even better to get a separate helper for this part, who doesn’t know the subject and hasn’t seen the questions. Read each question out to them and ask them what they think the answer might be – without giving them the options. Their initial reaction and gut instinct may well give you an idea for that elusive last plausible option.

- Remove any trick options or reading tests

For me, one of the most frustrating situations is to be presented with a challenging question and several options which are (in theory) equally plausible, only to find that the grammatical construction means just one of them can actually be correct. At the other end of the scale, I don’t want to be scratching my head trying to work out whether a double negative means it’s option B or option C, for example. Both of these turn a potentially thought-provoking interaction into a reading test, which isn’t constructive.

There’s also a difference between plausible but incorrect options (which are challenging in the right way) and deliberate red herrings or traps. If you’re including something which is wrong but which you know lots of people do, that’s fine because it’s an inappropriate behaviour you are trying to address. But if you’re purposefully writing incorrect options which you hope people will ‘fall for’, I don’t think that’s fair.

So, take a breather and then come back to review your interactions with fresh eyes. In each case, ask yourself honestly whether you are following the Goldilocks rule and providing something which is challenging but not impossible or deliberately frustrating.

- Make feedback meaningful

I’d estimate that it’s around mid-afternoon now, and all the effort you put in yesterday and today means your interactions are fairly close to perfect! There’s just one more aspect that needs some attention, and that’s the feedback. For me, this is just as important as the activity itself. It’s also easy to refresh the feedback in your course, as it’s usually just a case of editing some text.

First of all, then, what does good and bad feedback look like? I asked my hard-working webinar participants to come up with some examples.

I think they more or less have it covered. Take a close look at the feedback in your course. Does it tend to be little more than a tick or a cross? If so, think about how you can expand on it to provide some additional learning and explain why something is the right answer. Imagine that one of your learners happened to guess the right answer – what they need is feedback that explains why that’s the case and will help it stick. On the other hand, all the work we’ve done so far means that the incorrect options are quite plausible, so of course some learners will select them. It’s only fair to explain to them why they’re wrong.

Does your feedback often simply repeat the correct answer in the same words? I’m not sure this is the most effective approach. Let’s return to that learner who guessed the right answer: if the feedback is worded the same way it’s probably going to wash over them, whereas phrasing it slightly differently might help develop their understanding so that next time it’s not just a guess. Likewise, if someone didn’t get the right answer, perhaps explaining it in a different way in the feedback will resonate with them in a way that the original phrasing didn’t.

One thing I would have liked to discuss on the webinar is the pros and cons of having the same feedback for all options, correct or incorrect. I think this can work if it’s done well – so that all options are covered and the feedback makes sense in every situation – but I can’t quite decide whether it’s a slightly lazy approach. We didn’t have time to discuss this during the session, but perhaps it’s something to pick up in the discussion box below.

Once you’ve checked and improved the feedback throughout your course, you’re done for the day! Today we’ve taken our examination of your interactions to the next level, ensuring the options and feedback are as useful, fair and constructive as possible. See you again tomorrow, when we’ll be looking at how to inject a bit of character into your course.

Hi Steph,

Thanks for (as always) a great post, with lots of ‘calls to action’!

One point you mentioned that I would like to open up for discussion is:

“if someone who is new to the subject can pass the test without taking the course, you need to re-write your test!”

I would also suggest that, before considering a potential rewrite, it might be an idea to consider whether the entire activity is required. Of course, basing that decision on a single test case would be unwise, but if a realistic test group were all able to provide correct, reasoned responses, that may provide evidence to those requesting the activity that the requisite knowledge, skills and behaviours are already widespread, and that what is needed is something other than an online course.

And then, of course, there are the compliance activities… Don’t get me started on them!

😉

Hi Craig. Sorry it took me a little while to respond – a post every day is more time-consuming than I thought! I completely agree with you that there’s no point putting the time and effort into rewriting something without making sure it’s still needed. That’s why I made sure to say in my post last Friday that one of the assumptions I was making for this set of posts was that you’ve already made sure there is still a need for the e-learning course. I think your idea of using a test group to complete the knowledge test or assessment is a great one for helping to establish whether a refresh of the course is appropriate or whether a different approach or resource is needed. As for compliance courses, I know how you feel about them, but (rightly or wrongly) they are probably here to stay and so it’s up to us in L&D to find ways to make them more enjoyable (and effective) experiences, even if we don’t agree with them in principle. And, I know I’ve said it before, but some of my favourite projects I’ve worked on have been compliance courses!
